Sitemap

A list of all the posts and pages found on the site. For you robots out there, there is an XML version available for digesting as well.

Pages

Posts

Future Blog Post

Published:

This post will show up by default. To disable scheduling of future posts, edit _config.yml and set future: false.

Blog Post number 4

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 3

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 2

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 1

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Portfolio

DDReach

A learning-based reachability analysis for stochastic dynamical systems.

LB4TL

A novel and scalable smooth under-approximation of STL quantitative semantics, with application to the neurosymbolic training of a satisficing neural feedback policy.

STL2CBF

A learning-based control synthesis approach for STL in the presence of model uncertainty.

STLVerNN

A deterministic formal verification framework for Signal Temporal Logic (STL) specifications.

STL_dropout

Neural feedback policy training for long-horizon tasks and high-dimensional systems.

Publications

A comparison of stealthy sensor attacks on control systems

Published in IEEE 2018 Annual American Control Conference (ACC), 2018

As more attention is paid to security in the context of control systems and as attacks occur on real control systems throughout the world, it has become clear that some of the most nefarious attacks are those that evade detection. The term stealthy has come to encompass a variety of techniques that attackers can employ to avoid detection. Here we show how the states of the system (in particular, the reachable set corresponding to the attack) can be manipulated under two important types of stealthy attacks. We employ the chi-squared fault detection method and demonstrate how this imposes a constraint on the attack sequence either to generate no alarms (zero-alarm attack) or to generate alarms at a rate indistinguishable from normal operation (hidden attack).

Recommended citation: Hashemi, Navid, Carlos Murguia, and Justin Ruths. "A comparison of stealthy sensor attacks on control systems." 2018 Annual American Control Conference (ACC). IEEE, 2018.

Download Paper
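To make the chi-squared detector in the 2018 ACC abstract above concrete, here is a minimal Python sketch, assuming a two-dimensional zero-mean Gaussian residual r with known covariance S. For two degrees of freedom the chi-squared tail probability is exp(-τ/2), so the threshold for a desired false alarm rate α is τ = -2 ln α. All function names are illustrative, not taken from the paper; the hypothetical `zero_alarm_residual` simply scales a chosen attack direction until the statistic sits on the alarm boundary.

```python
import math

def chi2_threshold_2dof(alpha):
    """Threshold tau with P(z > tau) = alpha for a chi-squared statistic with 2 DOF.

    For 2 degrees of freedom the tail probability is exp(-tau / 2).
    """
    return -2.0 * math.log(alpha)

def chi2_statistic(r, S):
    """z = r^T S^{-1} r for a 2-D residual r and a 2x2 covariance S."""
    (a, b), (c, d) = S
    det = a * d - b * c
    inv = [[d / det, -b / det], [-c / det, a / det]]
    return sum(r[i] * inv[i][j] * r[j] for i in range(2) for j in range(2))

def raises_alarm(r, S, tau):
    """The detector raises an alarm when the statistic exceeds the threshold."""
    return chi2_statistic(r, S) > tau

def zero_alarm_residual(direction, S, tau):
    """Hypothetical zero-alarm attack choice: scale a direction so z == tau.

    This is the largest residual along that direction that raises no alarm
    (up to floating-point rounding).
    """
    k = math.sqrt(tau / chi2_statistic(direction, S))
    return [k * direction[0], k * direction[1]]

tau = chi2_threshold_2dof(0.05)
S = [[1.0, 0.0], [0.0, 2.0]]
r_attack = zero_alarm_residual([1.0, 1.0], S, tau)
print(round(tau, 4), round(chi2_statistic(r_attack, S), 4))
```

The residuals that raise no alarm form the ellipsoid r^T S^{-1} r ≤ τ, which is why later abstracts on this page bound attack-reachable states with ellipsoids.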

Generalized chi-squared detector for LTI systems with non-Gaussian noise

Published in IEEE 2019 American Control Conference (ACC), 2019

Previously, we derived exact relationships between the properties of a linear time-invariant control system and properties of an anomaly detector that quantified the impact an attacker can have on the system if that attacker aims to remain stealthy to the detector. A necessary first step in this process is to be able to precisely tune the detector to a desired level of performance (false alarm rate) under normal operation, typically through the selection of a threshold parameter. To date, efforts have only considered Gaussian noise. Here we generalize the approach to tune a chi-squared anomaly detector for noise with non-Gaussian distributions. Our method leverages a Gaussian Mixture Model to represent the arbitrary noise distributions, which preserves analytic tractability and provides an informative interpretation in terms of a collection of chi-squared detectors and multiple Gaussian disturbances.

Recommended citation: Hashemi, Navid, and Justin Ruths. "Generalized chi-squared detector for LTI systems with non-Gaussian noise." 2019 American Control Conference (ACC). IEEE, 2019.

Download Paper
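The generalized detector above represents non-Gaussian noise with a Gaussian mixture. As a simplified sketch, assuming a scalar residual r whose distribution is a known Gaussian mixture and a detector statistic z = r²/σ² with an assumed normalization σ, the false alarm rate is a weighted sum of per-component Gaussian tail probabilities, and the threshold can be tuned by bisection. The helper names, the mixture parameters, and the bisection search are illustrative assumptions, not the paper's derivation.

```python
import math

def normal_cdf(x):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def false_alarm_rate(tau, weights, means, stds, sigma):
    """P(r^2 / sigma^2 > tau) when the scalar residual r is a Gaussian mixture.

    Each mixture component contributes its own Gaussian tail mass, so the rate
    is a weighted sum over components.
    """
    a = sigma * math.sqrt(tau)  # an alarm is raised when |r| > a
    rate = 0.0
    for w, mu, s in zip(weights, means, stds):
        inside = normal_cdf((a - mu) / s) - normal_cdf((-a - mu) / s)
        rate += w * (1.0 - inside)
    return rate

def tune_threshold(alpha, weights, means, stds, sigma, tau_max=1e3, iters=200):
    """Bisection on tau; the false alarm rate is non-increasing in tau."""
    lo, hi = 0.0, tau_max
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if false_alarm_rate(mid, weights, means, stds, sigma) > alpha:
            lo = mid  # too many alarms: raise the threshold
        else:
            hi = mid  # rate is acceptable: try a lower threshold
    return hi

# Hypothetical zero-mean, skewed noise: 0.7*N(-0.3, 0.8^2) + 0.3*N(0.7, 1.2^2)
weights, means, stds = [0.7, 0.3], [-0.3, 0.7], [0.8, 1.2]
tau = tune_threshold(0.05, weights, means, stds, sigma=1.0)
print(round(tau, 3))
```

With a single standard normal component this recovers the usual chi-squared (one degree of freedom) threshold of about 3.84 for α = 0.05.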

Filtering approaches for dealing with noise in anomaly detection

Published in 2019 IEEE 58th Conference on Decision and Control (CDC), 5356-5361, 2019

The leading workhorse of anomaly (and attack) detection in the literature has been residual-based detectors, where the residual is the discrepancy between the observed output provided by the sensors (inclusive of any tampering along the way) and the estimated output provided by an observer. These techniques calculate some statistic of the residual and apply a threshold to determine whether or not to raise an alarm. To date, these methods have not leveraged the frequency content of the residual signal in making the detection problem easier, specifically dealing with the case of (e.g., measurement) noise. Here we demonstrate some opportunities to combine filtering to enhance the performance of residual-based detectors. We also demonstrate how filtering can provide a compelling alternative to residual-based methods when paired with a robust observer. In this process, we consider the class of attacks that are stealthy, or undetectable, by such filtered detection methods and the impact they can have on the system.

Recommended citation: Hashemi, Navid, et al. "Filtering approaches for dealing with noise in anomaly detection." 2019 IEEE 58th Conference on Decision and Control (CDC). IEEE, 2019.

Download Paper
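One simple way to combine filtering with a residual-based detector is sketched below, assuming a scalar residual and a first-order low-pass (exponential moving average) filter with gain β; the filter choice and all function names are assumptions for illustration, not the filters studied in the paper. For zero-mean white residual noise, the recursion y_k = (1 - β) y_{k-1} + β r_k shrinks the steady-state variance by the factor β/(2 - β), so a lower threshold can be used for the same false alarm rate.

```python
def low_pass(residuals, beta):
    """First-order low-pass (exponential moving average) filter, 0 < beta <= 1."""
    y, out = 0.0, []
    for r in residuals:
        y = (1.0 - beta) * y + beta * r
        out.append(y)
    return out

def filtered_alarms(residuals, beta, threshold):
    """Raise an alarm whenever the magnitude of the filtered residual exceeds the threshold."""
    return [abs(y) > threshold for y in low_pass(residuals, beta)]

def steady_state_variance_ratio(beta):
    """Var(y) / Var(r) in steady state when r is zero-mean white noise.

    From Var(y) = (1 - beta)^2 Var(y) + beta^2 Var(r).
    """
    return beta / (2.0 - beta)

# A persistent unit offset (e.g. a sensor bias) only builds up gradually.
print(filtered_alarms([1.0, 1.0, 1.0, 1.0], beta=0.5, threshold=0.8))
```

The price of the variance reduction is delay: with β = 0.5 a persistent unit offset appears in the filtered signal as 0.5, 0.75, 0.875, ..., so noise suppression is traded against detection speed.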

Gain design via LMIs to minimize the impact of stealthy attacks

Published in 2020 American Control Conference (ACC), 2020

The goal of this paper is to design the gain matrices for estimate-based feedback to minimize the impact that falsified sensor measurements can have on the state of a stochastic linear time-invariant system. Here we consider attackers that stay stealthy, by raising no alarms, to a chi-squared anomaly detector, thereby restricting the set of attack inputs to an ellipsoidal set. We design linear matrix inequalities (LMIs) to find a tight outer ellipsoidal bound on the convex set of states reachable due to the stealthy inputs (and noise). Subsequently considering the controller and estimator gains as design variables requires further linearization to maintain the LMI structure. Without a competing performance criterion, the solution of this gain design is the trivial uncoupling of the feedback loop (setting either gain to zero). Here we consider, and convexify, an output-constrained covariance (OCC) $\|H\|_2$ gain constraint on the non-attacked system. Through additional tricks to linearize the combination of these LMI constraints, we propose an iterative algorithm whose core is a combined convex optimization problem to minimize the state reachable set due to the attacker while ensuring a small enough $\|H\|_2$ gain during nominal operation.

Recommended citation: Hashemi, Navid, and Justin Ruths. "Gain design via LMIs to minimize the impact of stealthy attacks." 2020 American Control Conference (ACC). IEEE, 2020.

Download Paper
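For readers unfamiliar with the ellipsoidal bounds in the abstract above, the standard S-procedure argument can be written as follows, assuming closed-loop dynamics x_{k+1} = A x_k + B δ_k with the stealthy input confined to δ_k^T W δ_k ≤ 1. This is a generic sufficient condition for the ellipsoid {x : x^T P x ≤ 1} to remain invariant, not the paper's exact formulation, which additionally treats the controller and estimator gains as decision variables.

```latex
% Goal: x_k^\top P x_k \le 1 and \delta_k^\top W \delta_k \le 1 \implies x_{k+1}^\top P x_{k+1} \le 1.
% Sufficient (S-procedure): for some a \in (0,1) and all (x, \delta),
(Ax + B\delta)^\top P (Ax + B\delta) \le a\, x^\top P x + (1-a)\, \delta^\top W \delta ,
% which is equivalent to the matrix inequality (linear in P for fixed a)
\begin{bmatrix}
  aP - A^\top P A & -A^\top P B \\
  -B^\top P A & (1-a) W - B^\top P B
\end{bmatrix} \succeq 0, \qquad P \succ 0 .
% The right-hand side of the first inequality is at most a + (1-a) = 1.
% A tight outer ellipsoid is then sought, e.g., by maximizing \log\det P
% (minimizing the ellipsoid volume) subject to this LMI, with a line search over a.
```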

Distributionally Robust Tuning of Anomaly Detectors in Cyber-Physical Systems with Stealthy Attacks

Published in IEEE 2020 American Control Conference (ACC), 2020

Designing resilient control strategies for mitigating stealthy attacks is a crucial task in emerging cyber-physical systems. In the design of anomaly detectors, it is common to assume Gaussian noise models to maintain tractability; however, this assumption can lead the actual false alarm rate to be significantly higher than expected. We propose a distributionally robust anomaly detector for noise distributions in moment-based ambiguity sets. We design a detection threshold that guarantees that the actual false alarm rate is upper bounded by the desired one by using generalized Chebyshev inequalities. Furthermore, we highlight an important tradeoff between the worst-case false alarm rate and the potential impact of a stealthy attacker by efficiently computing an outer ellipsoidal bound for the attack-reachable states corresponding to the distributionally robust detector threshold. We illustrate this trade-off with a numerical example and compare the proposed approach with a traditional chi-squared detector.

Recommended citation: Renganathan, V., Hashemi, N., Ruths, J., & Summers, T. H. (2020, July). Distributionally robust tuning of anomaly detectors in cyber-physical systems with stealthy attacks. In 2020 American Control Conference (ACC) (pp. 1247-1252). IEEE.

Download Paper
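The distributionally robust threshold above relies on Chebyshev-type inequalities. A scalar illustration, assuming only the mean and variance of the detector statistic are known, uses the one-sided Chebyshev (Cantelli) inequality P(Z - μ ≥ t) ≤ σ²/(σ² + t²); setting the right-hand side equal to α gives the threshold. The paper's generalized multivariate inequalities and ambiguity sets are not reproduced here, and the function names are hypothetical.

```python
import math

def cantelli_threshold(mean, variance, alpha):
    """Threshold tau guaranteeing P(Z >= tau) <= alpha for ANY distribution
    of Z with the given mean and variance (one-sided Chebyshev / Cantelli)."""
    return mean + math.sqrt(variance * (1.0 - alpha) / alpha)

def cantelli_bound(mean, variance, tau):
    """Worst-case false alarm rate variance / (variance + t^2), t = tau - mean > 0."""
    t = tau - mean
    return variance / (variance + t * t)

# A 2-DOF chi-squared statistic has mean 2 and variance 4; the Gaussian-based
# threshold for alpha = 0.05 is about 5.99.
tau_dr = cantelli_threshold(2.0, 4.0, 0.05)
print(round(tau_dr, 3))
```

For the same α, this moment-based threshold (about 10.72) is larger than the Gaussian one (about 5.99). That is the price of guaranteeing the false alarm rate for every distribution with those moments, and a larger threshold in turn leaves more room for a stealthy attacker, which is the trade-off the abstract highlights.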

Higher-order moment-based anomaly detection

Published in IEEE Control Systems Letters, 2021

The identification of anomalies is a critical component of operating complex, large-scale and geographically distributed cyber-physical systems. While designing anomaly detectors, it is common to assume Gaussian noise models to maintain tractability; however, this assumption can lead to the actual false alarm rate being significantly higher than expected. Here we design a distributionally robust threshold of detection using finite and fixed higher-order moments of the detection measure data such that it guarantees the actual false alarm rate to be upper bounded by the desired one. Further, we bound the states reachable through the action of a stealthy attack and identify the trade-off between this impact of attacks that cannot be detected and the worst-case false alarm rate. Through numerical experiments, we illustrate how knowledge of higher-order moments results in a tightened threshold, thereby restricting an attacker’s potential impact.

Recommended citation: Renganathan, V., Hashemi, N., Ruths, J., & Summers, T. H. (2021). Higher-order moment-based anomaly detection. IEEE Control Systems Letters, 6, 211-216.

Download Paper
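To see why higher-order moments can tighten a distribution-free threshold, assume the detection measure Z is nonnegative and a few of its raw moments E[Z^k] are known. Markov's inequality applied to Z^k gives P(Z ≥ t) ≤ E[Z^k]/t^k for every k, and the tightest of these thresholds can be kept. This is a simpler bound than the one developed in the paper, and the function name is hypothetical.

```python
def moment_threshold(moments, alpha):
    """Distribution-free threshold from finitely many moments of Z >= 0.

    moments: dict mapping order k >= 1 to E[Z^k].
    Each t_k = (E[Z^k] / alpha)^(1/k) guarantees P(Z >= t_k) <= alpha,
    so the smallest t_k is still a valid threshold.
    """
    return min((m / alpha) ** (1.0 / k) for k, m in moments.items())

# Z = |X| with X standard normal: E[Z^2] = 1, E[Z^4] = 3, E[Z^6] = 15.
print(moment_threshold({2: 1.0}, 0.01))
print(moment_threshold({2: 1.0, 4: 3.0, 6: 15.0}, 0.01))
```

With only the second moment, α = 0.01 gives a threshold of 10; adding the fourth and sixth moments lowers it to about 3.38.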

Performance Bounds for Neural Network Estimators: Applications in Fault Detection

Published in IEEE 2021 American Control Conference (ACC), 3260-3266, 2021

We exploit recent results in quantifying the robustness of neural networks to input variations to construct and tune a model-based anomaly detector, where the data-driven estimator model is provided by an autoregressive neural network. In tuning, we specifically provide upper bounds on the rate of false alarms expected under normal operation. To accomplish this, we extend the theory to allow for the propagation of multiple confidence ellipsoids through a neural network. The ellipsoid that bounds the output of the neural network under the input variation informs the sensitivity, and thus the threshold tuning, of the detector. We demonstrate this approach on a linear and a nonlinear dynamical system.

Recommended citation: Hashemi, Navid, Mahyar Fazlyab, and Justin Ruths. "Performance bounds for neural network estimators: Applications in fault detection." 2021 American Control Conference (ACC). IEEE, 2021.

Download Paper
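The detector above propagates confidence ellipsoids through an autoregressive network. As a simpler stand-in, assuming axis-aligned boxes instead of ellipsoids, interval bound propagation shows the same pattern of pushing an input uncertainty set through affine and ReLU layers to bound the network output. This swapped-in technique, the toy network, and all function names are assumptions, not the paper's method.

```python
def affine_bounds(lo, hi, W, b):
    """Propagate an input box [lo, hi] through y = W x + b (per output coordinate)."""
    out_lo, out_hi = [], []
    for row, bias in zip(W, b):
        l = h = bias
        for w, xl, xh in zip(row, lo, hi):
            # A positive weight maps lower bounds to lower bounds; a negative one swaps them.
            l += w * (xl if w >= 0 else xh)
            h += w * (xh if w >= 0 else xl)
        out_lo.append(l)
        out_hi.append(h)
    return out_lo, out_hi

def relu_bounds(lo, hi):
    """ReLU is monotone, so it can be applied to each bound separately."""
    return [max(0.0, v) for v in lo], [max(0.0, v) for v in hi]

def network_bounds(lo, hi, layers):
    """layers: list of (W, b); a ReLU follows every layer except the last."""
    for i, (W, b) in enumerate(layers):
        lo, hi = affine_bounds(lo, hi, W, b)
        if i < len(layers) - 1:
            lo, hi = relu_bounds(lo, hi)
    return lo, hi

# Hypothetical 2-2-1 ReLU network and input box [-1, 1]^2
layers = [([[1.0, -1.0], [1.0, 1.0]], [0.0, 0.0]),
          ([[1.0, 1.0]], [0.5])]
print(network_bounds([-1.0, -1.0], [1.0, 1.0], layers))
```

The width of the resulting output interval plays the role that the bounding ellipsoid plays in the abstract: it quantifies how much the estimate can move under the input variation.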

Certifying Incremental Quadratic Constraints for Neural Networks via Convex Optimization

Published in Proceedings of the 3rd Conference on Learning for Dynamics and Control, PMLR 144:842-853, 2021

Abstracting neural networks with constraints they impose on their inputs and outputs can be very useful in the analysis of neural network classifiers and to derive optimization-based algorithms for certification of stability and robustness of feedback systems involving neural networks. In this paper, we propose a convex program, in the form of a Linear Matrix Inequality (LMI), to certify quadratic bounds on the map of neural networks over a region of interest. These certificates can capture several useful properties such as (local) Lipschitz continuity, one-sided Lipschitz continuity, invertibility, and contraction. We illustrate the utility of our approach in two different settings. First, we develop a semidefinite program to compute guaranteed and sharp upper bounds on the local Lipschitz constant of neural networks and illustrate the results on random networks as well as networks trained on MNIST. Second, we consider a linear time-invariant system in feedback with an approximate model predictive controller given by a neural network. We then turn the stability analysis into a semidefinite feasibility program and estimate an ellipsoidal invariant set for the closed-loop system.

Recommended citation: Hashemi, Navid, Justin Ruths, and Mahyar Fazlyab. "Certifying incremental quadratic constraints for neural networks via convex optimization." Learning for Dynamics and Control. PMLR, 2021.

Download Paper
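For context on the Lipschitz application above, the sketch below computes the naive bound obtained by multiplying the spectral norms of the weight matrices, which is valid for networks with 1-Lipschitz activations such as ReLU. It is the kind of loose baseline that SDP-based certificates aim to tighten, not the paper's LMI; the function names and example weights are hypothetical.

```python
import math

def spectral_norm(W, iters=200):
    """Largest singular value of W (list of rows) by power iteration on W^T W.

    Assumes the all-ones start vector is not orthogonal to the top singular vector.
    """
    n = len(W[0])
    v = [1.0] * n
    for _ in range(iters):
        Wv = [sum(w * x for w, x in zip(row, v)) for row in W]
        u = [sum(W[i][j] * Wv[i] for i in range(len(W))) for j in range(n)]  # W^T W v
        norm = math.sqrt(sum(x * x for x in u))
        if norm == 0.0:
            return 0.0
        v = [x / norm for x in u]
    Wv = [sum(w * x for w, x in zip(row, v)) for row in W]
    return math.sqrt(sum(x * x for x in Wv))

def naive_lipschitz_bound(weights):
    """Product of layer spectral norms: a valid but often loose l2 Lipschitz
    upper bound for a network whose activations are 1-Lipschitz (e.g. ReLU)."""
    bound = 1.0
    for W in weights:
        bound *= spectral_norm(W)
    return bound

print(naive_lipschitz_bound([[[2.0, 0.0], [0.0, 1.0]], [[3.0, 0.0]]]))
```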

Practical detectors to identify worst-case attacks

Published in 2022 IEEE Conference on Control Technology and Applications (CCTA), 2022

Recent work on quantifying the impact of attacks on control systems has motivated the design of worst-case attacks that define the envelope of the attack impact possible while remaining stealthy to model-based anomaly detectors. Such attacks, although stealthy for the considered detector test, tend to produce detector statistics that are easily identifiable by the naked eye. Although such behavior may seem obvious, human operators cannot simultaneously monitor all process control variables of a large-scale cyber-physical system. What is lacking in the literature is a set of practical detectors that can identify such unusual attacked behavior. In defining these, we enable automated detection of attacks that have so far been stealthy and further constrain the impact of attacks that are stealthy to a set of combined detectors, both existing and new.

Recommended citation: Umsonst, D., Hashemi, N., Sandberg, H., & Ruths, J. (2022, August). Practical detectors to identify worst-case attacks. In 2022 IEEE Conference on Control Technology and Applications (CCTA) (pp. 197-204). IEEE.

Download Paper
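The observation above, that worst-case stealthy attacks produce unnaturally regular detector statistics, suggests checks such as the hypothetical one below, which flags sliding windows where a chi-squared statistic barely varies. The window length and variance floor are assumed tuning parameters, and this is not one of the detectors proposed in the paper.

```python
from statistics import pvariance

def low_variance_alarms(stats, window, min_var):
    """Flag windows where the detector statistic is suspiciously steady.

    An attack that holds the statistic just below its threshold produces an
    almost constant sequence, whereas under normal operation the statistic
    fluctuates. Entry k covers stats[k : k + window].
    """
    return [pvariance(stats[k:k + window]) < min_var
            for k in range(len(stats) - window + 1)]

attacked = [5.9] * 6                        # statistic pinned near the threshold
nominal = [0.4, 3.1, 1.2, 6.0, 0.3, 2.2]    # hypothetical normal fluctuations
print(low_variance_alarms(attacked, 3, 0.1), low_variance_alarms(nominal, 3, 0.1))
```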

Codesign for Resilience and Performance

Published in IEEE Transactions on Control of Network Systems, 2022

We present two optimization approaches to minimize the impact of sensor falsification attacks in linear time-invariant systems controlled by estimate-based feedback. We accomplish this by finding observer and controller gain matrices that minimize outer ellipsoidal bounds that contain the reachable set of attack-induced states. To avoid trivial solutions, we impose a covariance-based $\|H\|_2$ closed-loop performance constraint. This exposes a tradeoff between system security and closed-loop performance and demonstrates that only small concessions in performance can lead to large gains in our reachability-based security metric. We provide both a nonlinear optimization based on geometric sums and a fully convexified approach formulated with linear matrix inequalities. We demonstrate the effectiveness of these tools on two numerical case studies.

Recommended citation: Hashemi, Navid, and Justin Ruths. "Co-design for resilience and performance." IEEE Transactions on Control of Network Systems (2022).

Download Paper

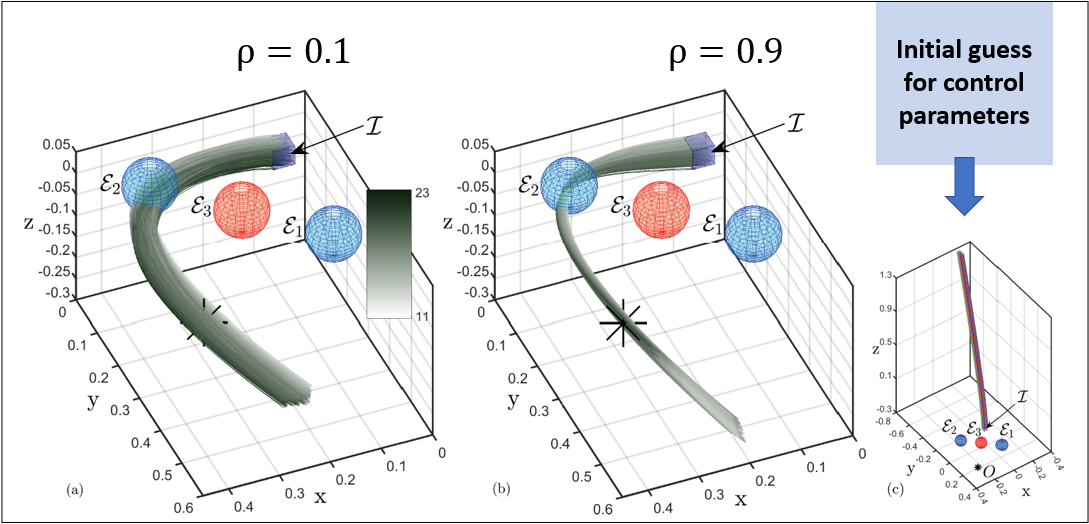

Risk-awareness in learning neural controllers for temporal logic objectives

Published in 2023 American Control Conference (ACC), 2023

In this paper, we consider the problem of synthesizing a controller in the presence of uncertainty such that the resulting closed-loop system satisfies certain hard constraints while optimizing certain (soft) performance objectives. We assume that the hard constraints encoding safety or mission-critical specifications are expressed using Signal Temporal Logic (STL), while performance is quantified using standard cost functions on system trajectories. To ensure satisfaction of the STL constraints, we algorithmically obtain control barrier functions (CBFs) from the STL specifications. We model controllers as neural networks (NNs) and provide an algorithm to train the NN parameters to simultaneously optimize the performance objectives while satisfying the CBF conditions (with a user-specified robustness margin). We evaluate the risk incurred by the trade-off between the robustness margin of the system and its performance using the formalism of risk measures. We demonstrate our approach on challenging nonlinear control examples such as quadcopter motion planning and a unicycle.

Recommended citation: Hashemi, Navid, et al. "Risk-awareness in learning neural controllers for temporal logic objectives." 2023 American Control Conference (ACC). IEEE, 2023.

Download Paper
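The abstract above evaluates the trade-off between robustness margin and performance using risk measures. Assuming conditional value-at-risk (CVaR) as the risk measure, a common choice that the abstract does not name, a sample-based estimate simply averages the worst fraction of observed losses; here a loss could be, hypothetically, the negative STL robustness of a sampled closed-loop trajectory. The function name is illustrative.

```python
import math

def empirical_cvar(losses, tail):
    """Average of the worst `tail` fraction of sampled losses (0 < tail <= 1).

    The tail size is rounded up to a whole number of samples; the small
    offset guards against floating-point round-up (e.g. 2.0000000000000004).
    """
    k = max(1, math.ceil(tail * len(losses) - 1e-9))
    worst = sorted(losses, reverse=True)[:k]
    return sum(worst) / k

print(empirical_cvar(list(range(1, 11)), 0.2))  # mean of the two largest losses
```

Unlike the mean, this estimate is driven entirely by the worst outcomes, which is what makes it a natural way to quantify how often a shrinking robustness margin leads to bad trajectories.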

A Neurosymbolic Approach to the Verification of Temporal Logic Properties of Learning-enabled Control Systems

Published in ICCPS 2023: Proceedings of the ACM/IEEE 14th International Conference on Cyber-Physical Systems (with CPS-IoT Week 2023), 2023

Signal Temporal Logic (STL) has become a popular tool for expressing formal requirements of Cyber-Physical Systems (CPS). The problem of verifying STL properties of neural network-controlled CPS remains a largely unexplored problem. In this paper, we present a model for the verification of Neural Network (NN) controllers for general STL specifications using a custom neural architecture where we map an STL formula into a feed-forward neural network with ReLU activation. In the case where both our plant model and the controller are ReLU-activated neural networks, we reduce the STL verification problem to reachability in ReLU neural networks. We also propose a new approach for neural network controllers with general activation functions; this approach is a sound and complete verification approach based on computing the Lipschitz constant of the closed-loop control system. We demonstrate the practical efficacy of our techniques on a number of examples of learning-enabled control systems.

Recommended citation: Hashemi, Navid, et al. "A neurosymbolic approach to the verification of temporal logic properties of learning-enabled control systems." Proceedings of the ACM/IEEE 14th International Conference on Cyber-Physical Systems (with CPS-IoT Week 2023). 2023.

Download Paper
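The key step in the neurosymbolic approach above is mapping an STL formula onto a ReLU network. A minimal sketch for two simple formulas, assuming a finite trace and the standard min/max robustness semantics, uses the identities min(a, b) = a - ReLU(a - b) and max(a, b) = a + ReLU(b - a), so each temporal operator becomes a stack of ReLU units plus linear operations. The function names are illustrative, not the paper's architecture.

```python
def relu(x):
    return max(0.0, x)

def relu_min(a, b):
    # min(a, b) = a - ReLU(a - b): one ReLU unit plus linear operations
    return a - relu(a - b)

def relu_max(a, b):
    # max(a, b) = a + ReLU(b - a)
    return a + relu(b - a)

def robustness_always(signal, c):
    """Robustness of 'always (x > c)' over a finite trace: min_t (x_t - c)."""
    rho = signal[0] - c
    for x in signal[1:]:
        rho = relu_min(rho, x - c)
    return rho

def robustness_eventually(signal, c):
    """Robustness of 'eventually (x > c)' over a finite trace: max_t (x_t - c)."""
    rho = signal[0] - c
    for x in signal[1:]:
        rho = relu_max(rho, x - c)
    return rho

trace = [1.5, 2.0, 1.2]
print(robustness_always(trace, 1.0), robustness_eventually(trace, 1.0))
```

Folding the trace sequentially, as here, gives a network whose depth grows linearly with the trace length; pairing terms in a balanced tree would make the depth logarithmic.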

Conformance testing for stochastic cyber-physical systems

Published in Conference on Formal Methods in Computer-Aided Design (FMCAD 2023), 2023

Conformance is defined as a measure of distance between the behaviors of two dynamical systems. The notion of conformance can accelerate system design when models of varying fidelities are available on which analysis and control design can be done more efficiently. Ultimately, conformance can capture distance between design models and their real implementations and thus aid in robust system design. In this paper, we are interested in the conformance of stochastic dynamical systems. We argue that probabilistic reasoning over the distribution of distances between model trajectories is a good measure for stochastic conformance. Additionally, we propose the non-conformance risk to reason about the risk of stochastic systems not being conformant. We show that both notions have the desirable transference property, meaning that conformant systems satisfy similar system specifications, i.e., if the first model satisfies a desirable specification, the second model will satisfy (nearly) the same specification. Lastly, we propose how stochastic conformance and the non-conformance risk can be estimated from data using statistical tools such as conformal prediction. We present empirical evaluations of our method on an F-16 aircraft, an autonomous vehicle, a spacecraft, and a Dubins vehicle.

Recommended citation: Qin, X., Hashemi, N., Lindemann, L., & Deshmukh, J. V. (2023, October). Conformance testing for stochastic cyber-physical systems. In Conference on Formal Methods in Computer-Aided Design (FMCAD 2023) (p. 294).

Download Paper
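To illustrate reasoning over the distribution of trajectory distances, assume paired trajectories have been simulated from the two systems under the same initial conditions and inputs. The sketch below measures each pair by its largest time-aligned Euclidean distance and estimates how often that distance exceeds a tolerance ε. Both the distance and the estimator are illustrative assumptions with hypothetical names; the paper obtains its guarantees with conformal prediction.

```python
import math

def trajectory_distance(traj_a, traj_b):
    """Largest Euclidean distance between time-aligned states of two trajectories."""
    return max(math.dist(a, b) for a, b in zip(traj_a, traj_b))

def empirical_exceedance(pairs, eps):
    """Fraction of sampled trajectory pairs whose distance exceeds eps."""
    distances = [trajectory_distance(a, b) for a, b in pairs]
    return sum(d > eps for d in distances) / len(distances)

# Two hypothetical (model, implementation) trajectory pairs in a 2-D state space
pairs = [([(0.0, 0.0), (1.0, 0.0)], [(0.0, 0.0), (1.0, 1.0)]),
         ([(0.0, 0.0)], [(3.0, 4.0)])]
print(empirical_exceedance(pairs, 2.0))
```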

Data-Driven Reachability Analysis of Stochastic Dynamical Systems with Conformal Inference

Published in 2023 62nd IEEE Conference on Decision and Control (CDC), 2023

We consider data-driven reachability analysis of discrete-time stochastic dynamical systems using conformal inference. We assume that we are not provided with a symbolic representation of the stochastic system, but instead have access to a dataset of K-step trajectories. The reachability problem is to construct a probabilistic flowpipe such that the probability that a K-step trajectory can violate the bounds of the flowpipe does not exceed a user-specified failure probability threshold. The key ideas in this paper are: (1) to learn a surrogate predictor model from data, (2) to perform reachability analysis using the surrogate model, and (3) to quantify the surrogate model’s incurred error using conformal inference in order to give probabilistic reachability guarantees. We focus on learning-enabled control systems with complex closed-loop dynamics that are difficult to model symbolically, but where state transition pairs can be queried, e.g., using a simulator. We demonstrate the applicability of our method on examples from the domain of learning-enabled cyber-physical systems.

Recommended citation: Hashemi, Navid, et al. "Data-Driven Reachability Analysis of Stochastic Dynamical Systems with Conformal Inference." 2023 62nd IEEE Conference on Decision and Control (CDC). IEEE, 2023.

Download Paper
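The conformal-inference step above can be sketched as follows, assuming a scalar state, a surrogate that predicts whole K-step trajectories, and calibration pairs of true and predicted trajectories that are exchangeable with the test trajectory. Scoring each calibration pair by its worst error over the horizon and inflating the surrogate's prediction by the conformal quantile of those scores yields a flowpipe. The function names are hypothetical, and the paper's surrogate model and nonconformity score may differ.

```python
import math

def conformal_quantile(scores, delta):
    """Split-conformal quantile: the ceil((n + 1)(1 - delta))-th smallest score.

    For exchangeable scores, a fresh score is <= this value with probability
    at least 1 - delta.
    """
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - delta) - 1e-12)  # guard against float round-up
    if rank > n:
        return math.inf  # not enough calibration data for this delta
    return sorted(scores)[rank - 1]

def conformal_flowpipe(predicted, calib_true, calib_pred, delta):
    """Inflate a K-step surrogate prediction by a conformal error bound.

    Each calibration score is the worst error over the whole horizon, so the
    per-step intervals hold jointly for all K steps with probability >= 1 - delta
    (marginally over the calibration data, assuming exchangeability).
    """
    scores = [max(abs(t - p) for t, p in zip(true_traj, pred_traj))
              for true_traj, pred_traj in zip(calib_true, calib_pred)]
    q = conformal_quantile(scores, delta)
    return [(p - q, p + q) for p in predicted]
```

Using the maximum error over the horizon as a single score, rather than one quantile per time step, is what turns a per-sample guarantee into a guarantee for the entire flowpipe.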

Convex Optimization-based Policy Adaptation to Compensate for Distributional Shifts

Published in 2023 62nd IEEE Conference on Decision and Control (CDC), 2023

In many real-world cyber-physical systems, control designers often model the dynamics of the physical components using stochastic dynamical equations and then design optimal control policies for the model. At any given time, a stochastic difference equation essentially models the distribution of next states conditioned on the state and controller action at that time. Due to shifts in this distribution, modeling assumptions on the stochastic dynamics made during initial control design may no longer be valid when the system is deployed in the real world. In safety-critical systems, this can be particularly problematic; even if the system follows the designed control trajectory that was deemed safe and optimal, it may reach unsafe states due to the distribution shift. In this paper, we address the following problem: suppose we obtain an optimal control trajectory in the training environment; how do we ensure that in the real system this optimal trajectory is tracked with minimal error? In other words, we wish to adapt an optimal trained policy to distribution shifts in the environment. We show that this problem can be cast as a nonlinear optimization problem solvable using heuristic optimization methods. However, a convex relaxation of this problem allows us to learn policies that track the optimal trajectory with much better error performance and faster computation times. We demonstrate the efficacy of our approach on two different case studies: optimal path tracking using a Dubins car model, and collision avoidance using both a linear and a nonlinear model for adaptive cruise control.

Recommended citation: Hashemi, Navid, Justin Ruths, and Jyotirmoy V. Deshmukh. "Convex Optimization-based Policy Adaptation to Compensate for Distributional Shifts." 2023 62nd IEEE Conference on Decision and Control (CDC). IEEE, 2023.

Download Paper
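As a toy version of the tracking problem above, assume a scalar linear model whose shift in the next-state distribution is captured by a known mean offset `shift`. Choosing the control to minimize the squared one-step tracking error plus a control penalty `lam * u^2` is then a convex quadratic with a closed-form minimizer. The model, the penalty weight, and the function names are assumptions for illustration, not the paper's convex relaxation.

```python
def adapted_control(x, x_target_next, a, b, shift, lam=0.0):
    """Control for the scalar model x+ = a*x + b*u + shift minimizing
    (a*x + b*u + shift - x_target_next)^2 + lam * u^2.

    The objective is a convex quadratic in u; setting its derivative to zero
    gives the unique minimizer (requires lam > 0 or b != 0).
    """
    return b * (x_target_next - a * x - shift) / (b * b + lam)

def track(x0, targets, a, b, shift, lam=0.0):
    """Roll out the shifted model, re-solving the one-step problem at each step."""
    xs, us = [x0], []
    for x_target in targets:
        u = adapted_control(xs[-1], x_target, a, b, shift, lam)
        us.append(u)
        xs.append(a * xs[-1] + b * u + shift)
    return xs, us

# Hypothetical trained-environment trajectory, tracked under an offset of 0.2
xs, us = track(0.0, [1.0, 1.5, 2.0], a=0.9, b=0.5, shift=0.2)
print(xs, us)
```

With `lam = 0` the shifted model hits each target exactly; a positive `lam` trades some tracking error for smaller control effort.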

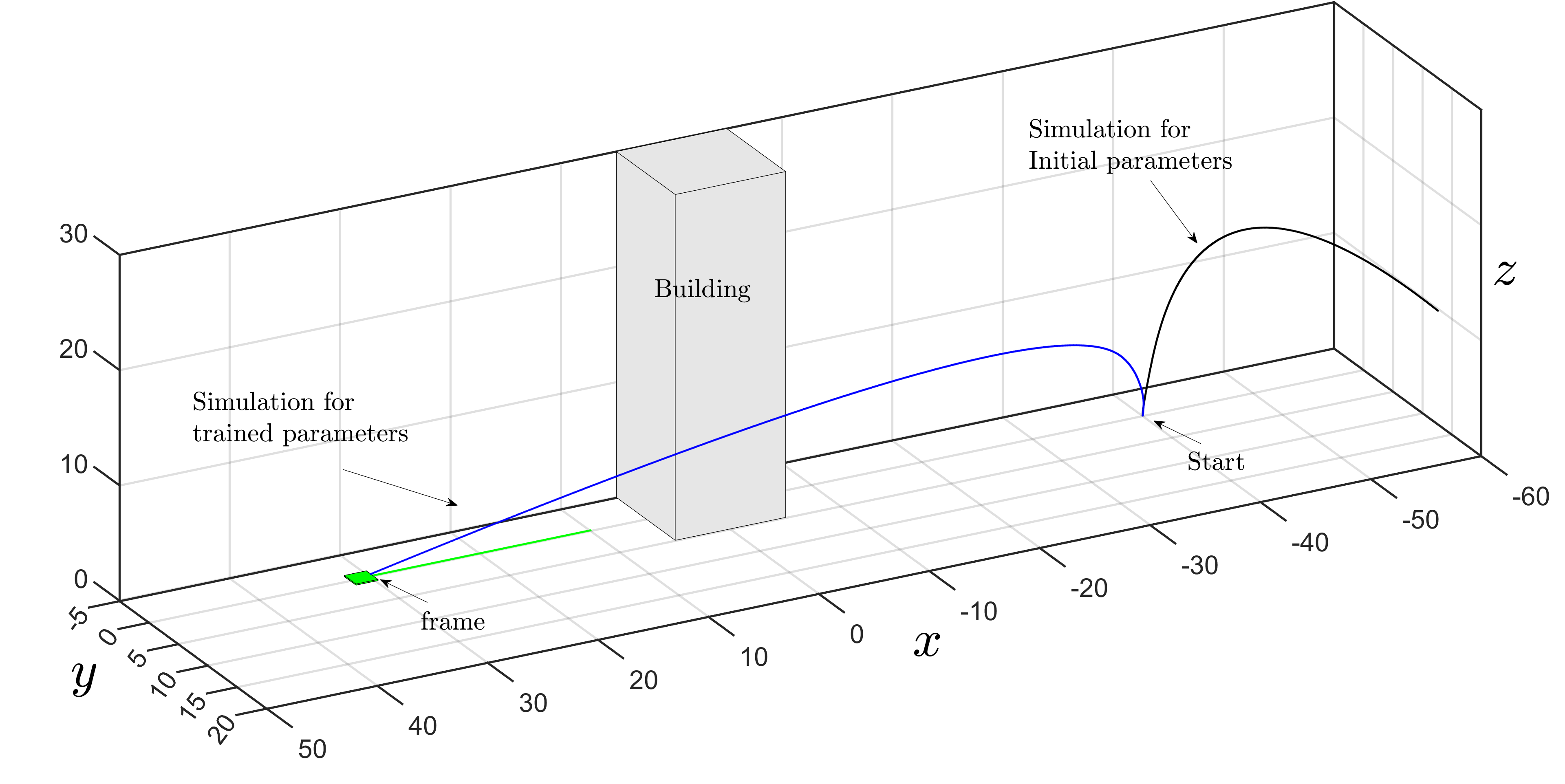

Scaling Learning based Policy Optimization for Temporal Tasks via Dropout

Published in ACM Transactions on Cyber-Physical Systems, 2024

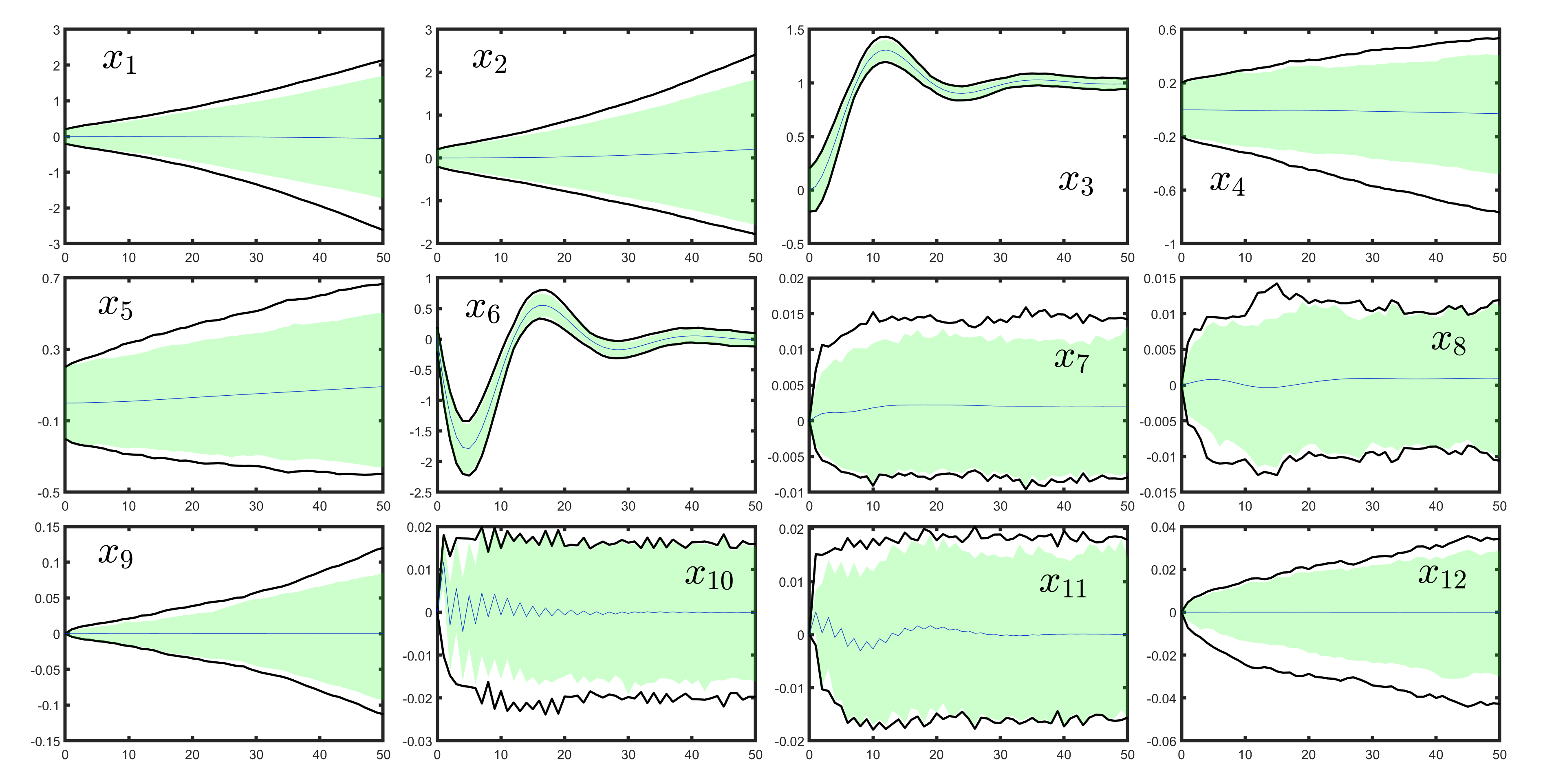

This paper introduces a model-based approach for training feedback controllers for an autonomous agent operating in a highly nonlinear environment. We desire the trained policy to ensure that the agent satisfies specific task objectives, expressed in discrete-time Signal Temporal Logic (DT-STL). One advantage of reformulating a task in a formal framework such as DT-STL is that it permits quantitative satisfaction semantics. In other words, given a trajectory and a DT-STL formula, we can compute the robustness, which can be interpreted as an approximate signed distance between the trajectory and the set of trajectories satisfying the formula. We represent the feedback controllers as feed-forward neural networks. We show how this learning problem is similar to training recurrent neural networks (RNNs), where the number of recurrent units is proportional to the temporal horizon of the agent’s task objectives. This poses a challenge: RNNs are susceptible to vanishing and exploding gradients, and naïve gradient descent-based strategies for long-horizon task objectives thus suffer from the same problems. To tackle this challenge, we introduce a novel gradient approximation algorithm based on the idea of dropout or gradient sampling. We also show that the existing smooth semantics for robustness are inefficient for gradient computation when the specification becomes complex. To address this, we propose a new smooth semantics for DT-STL that under-approximates the robustness value and scales well for backpropagation over a complex specification. We show that our control synthesis methodology can help stochastic gradient descent converge with fewer numerical issues, enabling scalable backpropagation over long time horizons and trajectories over high-dimensional state spaces.

Recommended citation: Hashemi, Navid, et al. "Scaling Learning based Policy Optimization for Temporal Tasks via Dropout." arXiv preprint arXiv:2403.15826 (2024).

Download Paper
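The vanishing and exploding gradient issue described above can be seen in the simplest unrolled closed loop. Assuming a scalar linear recurrence x_{k+1} = a x_k, backpropagation through K steps multiplies K identical Jacobians, so the gradient of the final state with respect to the initial one is a^K. This hypothetical sketch only illustrates the problem; the paper's dropout-based gradient approximation is not reproduced.

```python
def rollout_sensitivity(a, horizon):
    """d x_K / d x_0 for the scalar recurrence x_{k+1} = a * x_k.

    Backpropagation multiplies one Jacobian (here just a) per time step,
    exactly as in an unrolled RNN, so the result is a ** horizon.
    """
    grad = 1.0
    for _ in range(horizon):
        grad *= a
    return grad

for a in (0.9, 1.0, 1.1):
    print(a, rollout_sensitivity(a, 100))
```

With K = 100, a = 0.9 gives a sensitivity of about 2.7e-5 while a = 1.1 gives about 1.4e4, so even mild contraction or expansion per step makes long-horizon gradients unusable.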

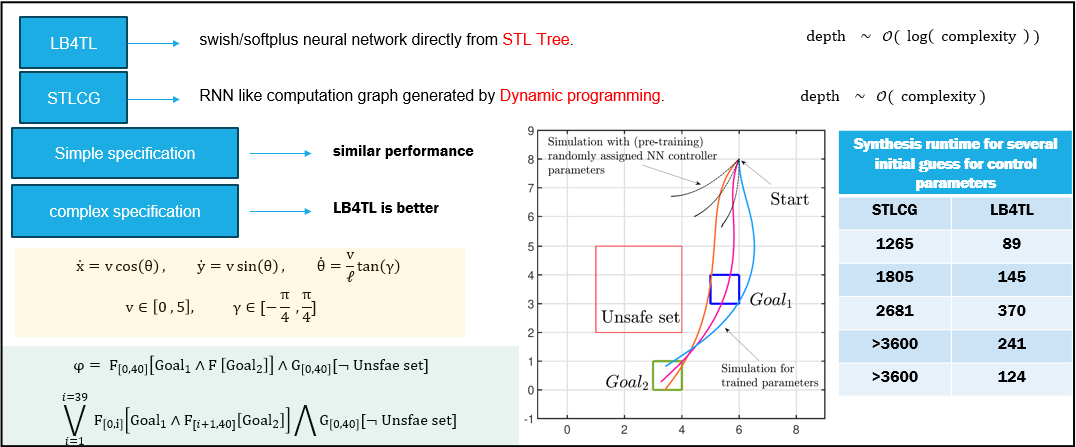

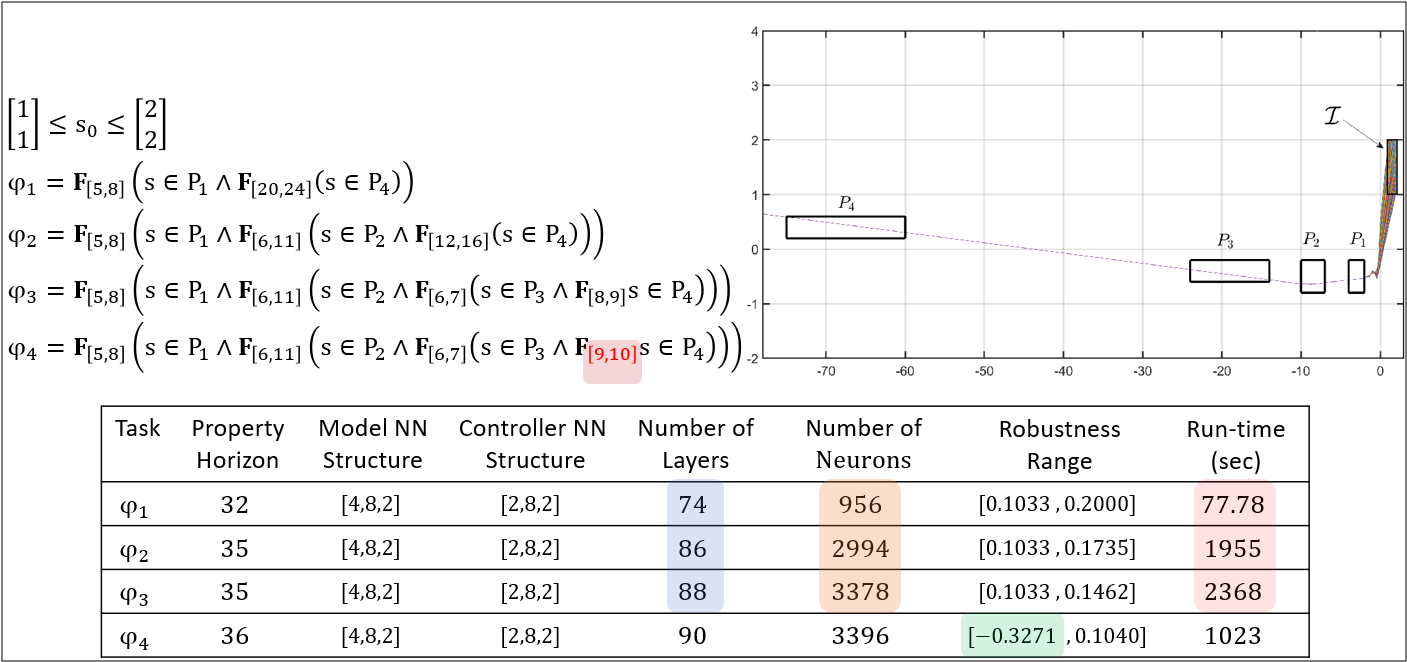

LB4TL: Smooth Semantics for Temporal Logic for Scalable Training of Neural Feedback Controllers

Published in IFAC-PapersOnLine, 2024

This paper presents a model-based framework for training neural network (NN)-based feedback controllers for autonomous agents with deterministic nonlinear dynamics to satisfy task objectives and safety constraints expressed in discrete-time Signal Temporal Logic (DT-STL). The DT-STL framework uses a robustness function to quantify the degree of satisfaction of a given DT-STL formula by a system trajectory. This robustness function serves a dual purpose: system verification through robustness minimization and control synthesis by maximizing robustness. However, the complexity of the robustness function due to its recursive definition, non-convexity, and non-differentiability poses challenges when it is used to train NN-based feedback controllers. This paper introduces a smooth computation graph to encode DT-STL robustness in a neurosymbolic framework. The computation graph represents a smooth approximation of the robustness, enabling the use of powerful optimization algorithms based on stochastic gradient descent and backpropagation to train NN feedback controllers. Our approximation is guaranteed to lower-bound the robustness value of a given DT-STL formula, and it shows orders-of-magnitude improvement over existing smooth approximations in the literature when applied to the NN feedback control synthesis task. We demonstrate the efficacy of this approach across various planning applications that require satisfaction of complex spatio-temporal and sequential tasks, and show that it scales well with formula complexity and size of the state space.

Recommended citation: Hashemi, Navid, et al. "LB4TL: Smooth Semantics for Temporal Logic for Scalable Training of Neural Feedback Controllers." IFAC-PapersOnLine (2024).

Download Paper
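The smooth, lower-bounding robustness computation described above can be illustrated with two standard building blocks, without assuming anything about LB4TL's specific computation graph: a log-sum-exp soft-min, which never exceeds the true minimum, and a softmax-weighted average, which never exceeds the true maximum. Because min and max are nondecreasing in every argument, nesting these lower bounds for conjunction/always (min) and disjunction/eventually (max) keeps the overall value a lower bound on the robustness. The function names and the sharpness parameter `beta` are illustrative.

```python
import math

def soft_min_lower(values, beta):
    """Log-sum-exp soft-min: always <= min(values) and >= min - log(n) / beta."""
    m = min(values)
    s = sum(math.exp(-beta * (v - m)) for v in values)  # s >= 1 (term with v == m)
    return m - math.log(s) / beta

def soft_max_lower(values, beta):
    """Softmax-weighted average: a convex combination, hence always <= max(values)."""
    m = max(values)
    w = [math.exp(beta * (v - m)) for v in values]  # shifted by m for stability
    return sum(wi * v for wi, v in zip(w, values)) / sum(w)

vals = [1.0, 2.0, 3.0]
print(soft_min_lower(vals, 10.0), soft_max_lower(vals, 10.0))
```

Larger `beta` brings both approximations closer to the exact min and max, at the cost of steeper gradients.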

Talks

LB4TL: Smooth Semantics for Temporal Logic for Scalable Training of Neural Feedback Controllers

Published:

Teaching

Teaching experience 1

Undergraduate course, University 1, Department, 2014

This is a description of a teaching experience. You can use markdown like any other post.

Teaching experience 2

Workshop, University 1, Department, 2015

This is a description of a teaching experience. You can use markdown like any other post.